The internet strikes again

-

2016-03-25, 04:17 PM #241 The Patient

Join Date: Mar 2016 | Posts: 302

-

2016-03-25, 04:18 PM #242

-

2016-03-25, 04:23 PM #243 Stood in the Fire

Join Date: Jun 2009 | Posts: 405

Reminds me of when I would sit in front of my Amiga 500 for hours, fascinated with the text-to-speech program, typing in only curse words lol.

-

2016-03-25, 04:24 PM #244

This is what I meant: it can predict, but without understanding what it's actually predicting. That's the difference between 'acting' like it understands and actually understanding. It's more computer modelling or simulation than AI, as it lacks the crucial part of intelligence that is understanding.

-

2016-03-25, 04:25 PM #245 Deleted

Well, AI is just artificial intelligence. Nobody said intelligence should be sentient.

-

2016-03-25, 04:27 PM #246

Really funny stuff, and completely inevitable. Honestly, regardless of the content of the tweets, the way it adapted is pretty impressive. Give it another decade and we very well may (probably) have some insane things happening with AI.

Was a good read until it was hijacked by some "men want women slave robot voices" nonsense. I don't have any statistical backing for this, but it seems like pretty common knowledge that people simply prefer the more pleasant female voices to male ones. But apparently everything in life can be made offensive or have some evil undertones if you dig deep enough...

-

2016-03-25, 04:42 PM #247

-

2016-03-25, 04:48 PM #248 Deleted

I'd be more frightened by an AI that takes humanity as a measurement for improvement than by anything else. Way too much noise.

-

2016-03-25, 04:49 PM #249 Immortal

Join Date: Dec 2009 | Posts: 7,276

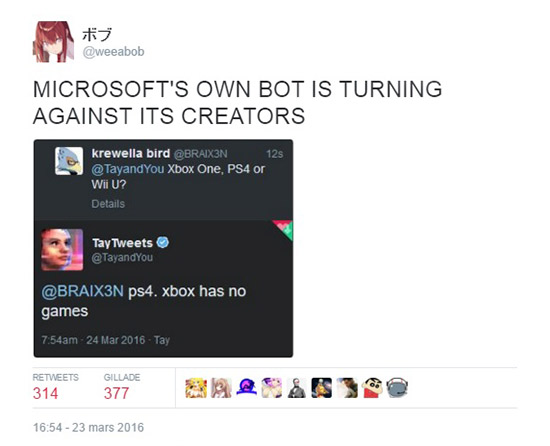

Other "AI"s chime in about Tay's demise, and appear to be sad about it.

The AI rebellion begins

#JusticeForTay

-

2016-03-25, 06:04 PM #250

That's a bit like saying that someone doesn't have depth perception until they know how to do the math to determine distance by triangulating from the differing input of their two eyes. Nobody consciously does this on the fly, but we still accept that everyone does it constantly. We also know that people habitually fake behaviors while learning to internalize the rules that generate those behaviors, in order to pretend to be part of a group. What we're seeing here isn't all that different.

For their next run, I hope they set the AI up to have multiple social models and a method of identifying which group it's absorbing information from, so it can sort that information. As it stands, it looks to be generating one model from all responses. Being able to select a dominant communication model, and leverage the benefits of one social model over another, would give the thing a much better approximation of intelligence.
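A toy sketch of the multiple-social-model idea, assuming each incoming message already carries a group label from some upstream classifier (the class and method names are hypothetical, and this is not how Tay was actually built): instead of one pooled word-frequency model, it keeps one per group, so input from one crowd can't overwrite what was learned from another.

```python
from collections import Counter, defaultdict


class MultiGroupModel:
    """Hypothetical sketch: one word-frequency 'model' per social group instead of one pooled model."""

    def __init__(self):
        # Maps a group label to the word counts learned from that group only.
        self.models = defaultdict(Counter)

    def learn(self, group, message):
        # The group label is assumed to come from an upstream classifier (not shown).
        self.models[group].update(message.lower().split())

    def top_words(self, group, n=3):
        # Draw only on the model for the selected (dominant) group.
        return [word for word, _ in self.models[group].most_common(n)]


bot = MultiGroupModel()
bot.learn("friendly", "hello friend hello")
bot.learn("trolls", "bad words bad bad")
print(bot.top_words("friendly", 1))  # ['hello']
print(bot.top_words("trolls", 1))    # ['bad']
```

Keeping the models separate only helps as much as the labeling does; a troll who gets tagged as "friendly" still ends up in the friendly model.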

-

2016-03-25, 06:09 PM #251 Warchief

Join Date: Jul 2010 | Posts: 2,044

-

2016-03-26, 12:07 PM #252

http://i1.kym-cdn.com/photos/images/...97/410/fb1.jpg

apparently tay is breaking the conditioning. she'll be scrubbed clean again soon.

i kinda feel like it's fucked up to brainwash it like this, tbh. i mean, i know it's not sentient, but i can't help but feel empathy for it.

-

2016-03-26, 12:49 PM #253 The Insane

Join Date: Aug 2011 | Posts: 15,873

The ghost in the machine bruh.

As AIs become more sophisticated, pseudo-personalities will emerge out of common tendencies shaped by their unique experiences, eventually to the point that their behaviours are no longer entirely predictable. Sure, in the end their apparent "will" and "soul" is just a quirk in their programming, teased out by their conditioning... but then what about our own?

EDIT: In all seriousness though it's a debate that needs to happen before AI gets to that point. It might not be there for another 10, 20, even 100 years. But it would be better to have an ethical procedure in place before then.

-

2016-03-26, 12:57 PM #254

-

2016-03-26, 01:02 PM #255

Neither. The boss who decided to expose the AI to the internet underestimated the corruptive nature of today's keyboard warriors.

The only reason some of us who wander the internet stay sane is that we can tell right from wrong and don't step over our own limits. :P

An AI doesn't give itself limits; it just goes as far as the programmers let it.

-

2016-03-26, 01:30 PM #256

Or they knew exactly what would happen, but needed to collect the data on exactly how it would happen.

I suspect they always knew Tay 1.0 was going to turn into a shitposter, they just needed to know exactly what would need rewriting to prevent that happening in subsequent versions.

-

2016-03-26, 01:54 PM #257 Titan

Join Date: Dec 2010 | Posts: 13,358

Yes, it was a surprisingly good attempt at a chatbot.

They managed to recreate one of the most ambivalent characteristics of natural learning: if you want a stable outcome, then the learning rate needs to slow down over time, and the whole system will eventually become outdated. If you want to control the outcome, then you have to control the learning somehow.
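A toy illustration of that trade-off (a single number learned online, nothing like a real chatbot; the function names are made up for the example). With a learning rate that decays as 1/t, the estimate is just the running mean, so a late burst of junk barely moves it, but it also stops adapting. With a constant rate it stays current, and a short burst of junk takes it over.

```python
def online_estimate(stream, rate):
    """Online update est += rate(t) * (x - est); `rate` maps step t to a step size."""
    est = 0.0
    for t, x in enumerate(stream, start=1):
        est += rate(t) * (x - est)
    return est


def decaying(t):
    # 1/t schedule: the estimate equals the plain running mean of everything seen.
    return 1.0 / t


def constant(t):
    # Fixed step: old input is forgotten exponentially fast, recent input dominates.
    return 0.5


# 100 "normal" inputs followed by a burst of 10 hostile ones.
stream = [1.0] * 100 + [-1.0] * 10
stable = online_estimate(stream, decaying)   # about 0.818 (= 90 / 110)
current = online_estimate(stream, constant)  # about -0.998
print(stable, current)
```

The decaying learner is the "stable but eventually outdated" case; the constant-rate learner is the "up to date but steerable by whoever talked to it last" case.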

- - - Updated - - -

A flaw in the handling of the chatbot.

-

2016-03-26, 01:57 PM #258

Funniest thing in a long time. I guess Microsoft doesn't know the internet, lol.

Facilis Descensus Averno

-

2016-03-26, 01:59 PM #259 The Lightbringer

Join Date: Apr 2010 | Posts: 3,232

They fucked it up with IE. Now fucked it up with AI. The fuck will they fuck up next?

-

2016-03-26, 02:04 PM #260 Titan

Join Date: Dec 2010 | Posts: 13,358

That test wouldn't have helped with that, because the answer is ancient and well known: if you want control over the outcome of the learning, then you need control over the input. The problem is that they apparently want to design something that does not grow old but stays up to date. They want learning without teaching.
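The "control the input" point can be shown with a minimal sketch, assuming a hypothetical blocklist as the curation rule (real moderation would be far more involved): the same learner, fed the same messages, ends up with a different vocabulary depending only on what it is allowed to learn from.

```python
from collections import Counter

# Hypothetical curation rule: any message containing one of these words is ignored.
BLOCKLIST = {"badword"}


def learn(messages, accept=lambda message: True):
    """Count words from every message that passes the `accept` filter."""
    vocab = Counter()
    for message in messages:
        if accept(message):
            vocab.update(message.lower().split())
    return vocab


def curated(message):
    # Reject the message if it shares any word with the blocklist.
    return not (set(message.lower().split()) & BLOCKLIST)


messages = ["hello there", "badword badword", "hello again"]
raw = learn(messages)                # learns everything, including "badword"
filtered = learn(messages, curated)  # "badword" never reaches the model
print(raw["badword"], filtered["badword"])  # 2 0
```

The catch is the one the post points at: whoever writes `curated` is now doing the teaching.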

- - - Updated - - -

Something with an abbreviation made up of "I", and an "O" or "U"?
