Ethical cars as described in the hypothetical example of the OP will not be a thing. If they were to become so, it would then be trivial to kill most car owners by cooperating with a few handfuls of their worst enemies.

-

2016-06-26, 01:01 AM #61 Deleted

-

2016-06-26, 01:03 AM #62

It should always preserve the lives of the people inside the car itself and, as a secondary goal, try to prevent as many outside casualties as possible.

Be passionate about the craft, achievements, events and community.

But do not worship the machine, pedestal nor system.

You cannot afford to be blind, for yourself and others.

-

2016-06-26, 01:16 AM #63

I must say, this is a stupid question. Go figure why it's stupid.

-

2016-06-26, 01:44 AM #64 Deleted

There never was a lesson of "in case of people suddenly appearing in front of your car, decide whether to ram them or take the suicide path" during a driver's license course. And there are no repercussions, as far as I know, if you do not take the suicide path when something suddenly appears in front of your car.

So ... we would teach a computer to try avoiding a collision at all cost while preserving the lives of its passengers.

I don't get where the foundation of this idea comes from. We do not have to tell the car to "Kill A or B", we just have to tell it to "Preserve A and B", as we would do ourselves.

-

2016-06-26, 01:48 AM #65

Doubt they'd be able to pass such laws. People would riot that they bought a car that was supposed to protect them and now they're being forced to give up their life if two people jump in front of their cars. No one would use them at that point, you'd see a complete return to cars with drivers.

Additionally, if those were the norm, then what happens when a group of, say, two or three people decide to get their kicks by jumping in front of cars on purpose to force the car to crash? Are they responsible for the death of a passenger of a driverless car? If so, on what grounds? They didn't crash the car, the car did.

I agree with the poster above you though: unless the car puts the occupants' lives as a priority, no one would buy them. As far as I'm concerned, if you don't obey common traffic laws and are in the street when a car is going through legally, you deserve what you get, even if that means death.

-

2016-06-26, 02:14 AM #66

Their choice and opinions are as meaningless as their conviction.

Most people questioned in a study say self-driving cars should aim to save as many lives as possible in a crash, even if that means sacrificing the passenger. But they also said they wouldn’t choose to use a car set up in such a manner.

Since no one wants to be fodder or have their children scrapped, no one will buy a righteous car so it won't be a thing. There is nothing to discuss here.

-

2016-06-26, 02:17 AM #67

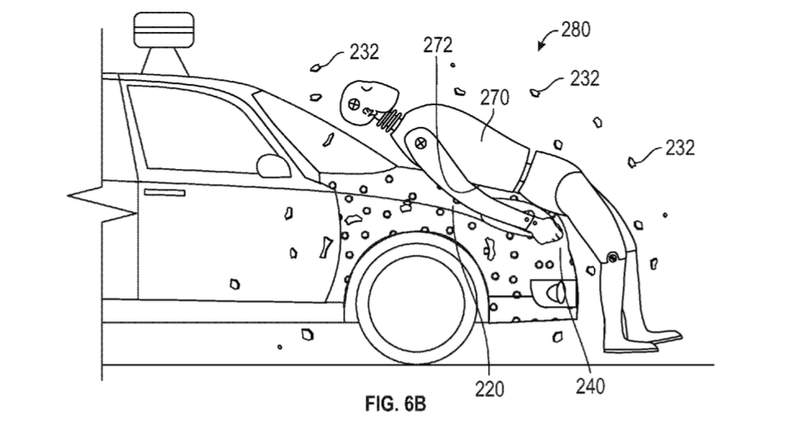

All these scenarios are written by someone with zero knowledge of self-driving cars. They might as well ask the car to transform into an Autobot and safely fly away. Self-driving cars have proximity sensors that can detect people/objects/etc. over 200m away. So why exactly would the car be on course to swerve into a bunch of pedestrians when it could have detected them and slowed down instead?

As for the question, I'd choose A because I'd want to protect the people in my car. I do not want to die for some jaywalker's stupidity. It's not my fault he decided to walk in the middle of the road.

-

2016-06-26, 02:21 AM #68

They're incapable of making moral decisions.

-

2016-06-26, 05:14 AM #69

-

2016-06-26, 05:17 AM #70

No. They should go when they are told. Stop when they should stop. And follow traffic laws.

All the car should do is hit the damn brakes when it senses an object in front of it. Anything more is asking for a lawsuit. And why the hell would the sensor not pick up a parade until the car was in imminent impact range?

Better sensors are the solution.

- - - Updated - - -

So random platoons of people regularly hop into traffic?

-

2016-06-26, 05:18 AM #71 The Patient

So this is basically like the trolley problem.

-

2016-06-26, 05:21 AM #72

One last thing.

Who would buy a car knowing that, if it came down to it, the car will destroy itself and kill you along with it? What insurance company would insure that car?

Holy shit this is stupid.

-

2016-06-26, 08:53 AM #73 Elemental Lord

The pedestrian detectors can allegedly detect cyclists as well https://www.engadget.com/2013/03/06/...eaking-system/ - and cyclists are prone to swerve in front of you unexpectedly. But the only sane choice is trying to stop the car, and not fully trusting the sensors (is it a human or a big doll in the road?). After all, if people cannot spell cyclist, how can we be 100% certain that there are no other errors?

Additionally, the moral choice is avoided by not having sensors to see whether there is a safe option to turn sharply (and working on rapid turns while avoiding a roll-over, which would be bad for the pedestrian).

-

2016-06-26, 09:25 AM #74 Deleted

I actually work in the industry.

First of all, please understand that your (modern) car is capable of a much stronger brake/steering intervention than you can manage as a driver (there are safety reasons why the car doesn't allow you to do a full intervention). I think most people in this thread believe they could do a better job than the automated driving. That's wrong.

Second, the whole premise of that exercise is a failure to begin with. You are in all the cases killing someone. The sensors on the car can pick up those people 200 meters away. The car can easily come to a full stop from its preset legal speed within 200 meters. You're more likely to fail as a driver because you're drunk/sneezing/field of view impaired/etc. than the car is in picking up those people and stopping. No one would die.
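As a rough sanity check on the 200 meter claim, here is a minimal sketch of the textbook stopping-distance estimate (distance travelled during system latency plus braking distance v²/(2a)). The deceleration of 7 m/s² and the 0.2 s latency are illustrative assumptions for a dry road, not figures from this post, and `stopping_distance_m` is a made-up helper name.

```python
def stopping_distance_m(speed_kmh, decel_ms2=7.0, latency_s=0.2):
    """Estimate the distance needed to stop from a given speed.

    decel_ms2 and latency_s are assumed values (dry asphalt, fast
    automated reaction); real figures vary with tyres, road and load.
    """
    v = speed_kmh / 3.6                  # convert km/h to m/s
    latency_distance = v * latency_s     # travelled before braking starts
    braking_distance = v ** 2 / (2 * decel_ms2)
    return latency_distance + braking_distance


for speed in (50, 100, 130):
    print(f"{speed} km/h: {stopping_distance_m(speed):.0f} m")
```

Under these assumptions even 130 km/h needs roughly 100 m, so a 200 m detection range leaves a wide margin. On a wet or icy road the achievable deceleration drops and the margin shrinks accordingly.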

Third. We're quite far away from fully automated driving anyway, mostly because of people's mindset. Since so few people would be willing to buy such a car, there is low demand and the car companies aren't really high on interest for full automation.

Think of the systems in the car not as "now I can go full stupid" or "take a nap while driving 500 km", but more along the lines of: a kid jumps in front of your car out of nowhere and the car intervenes much faster than you can.

-

2016-06-27, 03:02 AM #75
