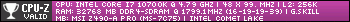

A couple of days ago some preliminary facts were leaked about Nvidia's and AMD's upcoming 28nm mobile GPUs.

Nvidia

AMD

Now obviously this isn't hard evidence and doesn't say much about the potential of desktop GPUs. But it might give us a solid indication of what to expect from the next generation of GPUs.

Edit: Bear in mind that Nvidia's list does not refer to the new Kepler architecture. Instead it refers to a Fermi shrink. "Kepler" models won't be out for another 3 months after.

Thread: 28nm mobile GPUs leaked

-

2011-08-25, 09:25 PM #1 Deleted

28nm mobile GPUs leaked

Last edited by mmoc433ceb40ad; 2011-08-25 at 11:31 PM.

-

2011-08-25, 09:26 PM #2

Sweet mother of... Those wattages make me tingly.

-

2011-08-25, 09:48 PM #3 Old God

- Join Date

- Aug 2010

- Posts

- 10,508

256bit GDDR5 is plenty even for ultra high end cards.

-

2011-08-25, 09:51 PM #4

The N13E-GTX is probably something like the GTX660M, and all the low-end GPUs are probably a die shrink of Fermi.

-

2011-08-25, 10:17 PM #5

Last edited by Asmekiel; 2011-08-25 at 11:14 PM.

-

2011-08-25, 10:37 PM #6

Out of idle curiosity, I think it'd be awesome to have a series of videos generally explaining what videocard differences are. For people like me, I have to doublecheck with you all because I get easily confused by this shit. :P

I can read the various clockspeeds, etc, but I can't say I find comparing them easy. :P

-

2011-08-25, 11:06 PM #7 Titan

- Join Date

- Oct 2010

- Location

- America's Hat

- Posts

- 14,141

My understanding was that Nvidia was a long way off from releasing any 28nm graphics cards, either desktop or mobile. Maybe the die shrink problems are for desktops. Good to see that both companies are pushing for better power consumption. Too bad the top-end chips will be expensive and we will see more powerful models before the 28nm chips are widely used.

-

2011-08-25, 11:18 PM #8

Last estimates were Q1 for both companies, with AMD maybe even late December. Looking at the charts this seems about right. Starting mass production/shipping doesn't mean (high) availability.

The TDP of a GTX560M is 75W according to: http://www.notebookcheck.net/NVIDIA-...I.58864.0.html. Looking at the chart, there hasn't been much change in this.

-edit- Grats on 1k posts

-extra edit- And hooray for MMO-Champion for getting the old smileys back. Three cheers for Boub!

-

2011-08-25, 11:20 PM #9 Deleted

It's difficult to generalize what to look for in a GPU since the specs and relative performance change with every generation.

Most of the time you should be looking at specific models, rather than specs.

That said, there are a few general statements to be made.

Memory width: "Gaming" GPUs are defined by their 256-bit or wider memory interface. Anything narrower is generally considered a "low-end" GPU and should not really be considered for serious gaming. Generally, GPUs from 150 dollars upwards feature a 256-bit memory interface; 128-bit or even 64-bit interfaces are reserved for low-end budget machines.

Memory size: Memory size is one of those aspects that steadily increases over time. Similar to normal RAM, memory size dictates how much texture information a GPU can store and process at once. The general consensus is that with current graphics details, 1 GB of video memory is sufficient for any single-monitor Full-HD setup. More than 1 GB only translates into extra performance when used in combination with even higher resolutions and multi-monitor setups.

Memory type: Just like regular RAM, video RAM is updated on a regular basis, resulting in lower power consumption, higher clock rates or faster access times. Generally, the higher the number in the name (GDDR3 versus GDDR5, for example), the better the RAM.

-

2011-08-26, 07:56 AM #10 Old God

- Join Date

- Aug 2010

- Posts

- 10,508

The bus width and the memory type are all about memory bandwidth. If the bandwidth is enough, there is no reason to go beyond 256 bit GDDR5.

You may get away with a lower clocked memory for a higher bus width, since it produces the same bandwidth, but that's it.
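To put rough numbers on that trade-off, here is a minimal sketch of the usual peak-bandwidth arithmetic: bus width in bytes times the effective per-pin data rate. The function name and the data rates are made up for illustration and are not taken from the leaked tables or any specific card.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface (e.g. 256).
    data_rate_gbps_per_pin: effective transfer rate per pin in Gbit/s
        (hypothetical values below, chosen only to show the trade-off).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gbps_per_pin


# A wider bus with slower memory versus a narrower bus with faster memory.
wide_slow = memory_bandwidth_gb_s(384, 4.0)
narrow_fast = memory_bandwidth_gb_s(256, 6.0)

print(f"384-bit @ 4.0 Gbps/pin: {wide_slow} GB/s")
print(f"256-bit @ 6.0 Gbps/pin: {narrow_fast} GB/s")
```

Both configurations come out at the same peak figure, which is the point: the bus width only matters through the bandwidth it produces together with the memory speed.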

We might see 128bit in the mid to high end segment again if Nvidia decides to use faster memory than GDDR5 (XDR2 for example).

Last edited by haxartus; 2011-08-26 at 08:10 AM.

-

2011-08-26, 08:08 AM #11

Aha. I notice some folks around here overclock their stuff -- does this produce any useful results?

-

2011-08-26, 08:11 AM #12

Interesting, but some things about these tables do seem rather odd.

Not sure if fake or not....

-

2011-08-26, 08:27 AM #13

-

2011-08-26, 08:55 AM #14 Old God

- Join Date

- Aug 2010

- Posts

- 10,508

Actually, I think it might be AMD that will come first with the faster XDR2 memory (at least for HD7970). Nvidia is "fine" with their 384 bit GDDR5.

256 bit XDR2 sounds good enough.

Last edited by haxartus; 2011-08-26 at 09:01 AM.

-

2011-08-26, 11:46 AM #15

I'm a regular SemiAccurate reader and followed Charlie while he was still at The Inquirer. While he does edit model numbers and such to protect his moles, he has a pretty good record when it comes to early information. He has been very wrong in the past as well, though, hence the site name.
