WoW is "multithreaded", but not as well as it could be. When WoW uses all cores evenly and gets better fps where it should, in raids, then it will be truly multithreaded.

-

2013-03-02, 09:23 PM #21

-

2013-03-02, 09:31 PM #22 (Scarab Lord; Join Date: Feb 2011; Posts: 4,030)

It doesn't matter how well an AAA title is multithreaded if current-gen GPUs can't push the CPU to full saturation. The fact of the matter is, only 50% of a 3570K's performance is required to fully saturate a top-end modern GPU (680/7970 GE) in most GPU-heavy AAA games. GPU technology doesn't improve all that fast anyway, so unless game engines are redesigned to rely more heavily on the CPU, the multi-core argument is moot for the foreseeable future.

-

2013-03-02, 09:45 PM #23

That's not how the CPU-GPU relationship works. One will not hard-wall the other at an FPS ceiling. This has been discussed to death on these forums and elsewhere. <.<

This is why stuff like Furmark will still max out a video card even on super old CPUs like a C2Q, even though that C2Q, and chips like the 3570K, still get a single thread maxed out.

i7-4770k - GTX 780 Ti - 16GB DDR3 Ripjaws - (2) HyperX 120s / Vertex 3 120

ASRock Extreme3 - Sennheiser Momentums - Xonar DG - EVGA Supernova 650G - Corsair H80i


-

2013-03-02, 09:56 PM #24 (Titan; Join Date: Apr 2009; Posts: 14,326)

You 100% sure about that? Every single MMORPG has the same problem of raids being slow. Rift, SWToR, GW2... It's not exclusive to WoW.

So either every single team coding MMORPGs for the last decade is dumber than the armchair coders of this forum, or the armchair coders actually have no idea what kind of technical problems MMOs have. Which of those do you think is the more likely scenario?

Never going to log into this garbage forum again as long as calling obvious troll obvious troll is the easiest way to get banned.

Trolling should be.

-

2013-03-02, 10:32 PM #25

-

2013-03-02, 10:39 PM #26

I raise you http://i.imgur.com/3ap2G8c.jpg

-

2013-03-03, 12:33 AM #27

Part of the reason MMOs have trouble with multithreading is that nobody has figured out a way around the problem yet. You don't need a master's in CS to come up with great ideas; a 5-year-old playing with a Raspberry Pi could figure it out at some point, or an "armchair coder" could. We won't know until it happens; it's just a matter of breaking the current paradigm. But I don't buy into "I have my BS in CS and therefore I know more than you"; those people are the cause of so many problems in computing.

-

2013-03-03, 08:58 AM #28 (Titan; Join Date: Apr 2009; Posts: 14,326)

Raspberry Pi has only one core so I doubt it teaches people multithreading :P

Anyway... With hundreds of people working on these games for more than a decade and nobody having figured it out yet, I personally think it's about time every armchair coder/whiner on this forum put their money where their mouth is and started selling their perfectly multithreaded MMO engine, since it's so easy to make. Or alternatively, shut up.

And when it comes to degrees, a big share of the talented coders in the gaming industry are self-taught hackers from the '90s demoscene. Maybe there should be a few more with CS degrees, who would bring formal thinking and theoretical knowledge instead.

Last edited by vesseblah; 2013-03-03 at 09:05 AM.


-

2013-03-03, 09:43 AM #29

While I agree for the most part, oddly enough most breakthroughs come from people approaching a problem from the outside, so we should be looking for someone who is not a programmer at all. After all, Einstein was still a patent office clerk when he worked out special relativity.

But I think MMOs in general are slightly harder than a normal game. Personally, if I were looking for the perfect MMO programmer, they would have extensive knowledge of multi-platform programming, networking, and everything from gaming to enthusiast-class computing, as well as compute and performance offloading. But nowadays this is a tall order (I blame HR for this): finding someone with paper credentials in all those fields and enough experience for a company to hire them is near impossible.

As for degrees, to me a degree is just a piece of paper that says you paid some school a bunch of money and at least figured out how to pass tests one at a time. It is by no means a measure of personal skill or knowledge. I can't count the number of sysadmins with master's degrees I've met who don't know a trunked switch from a hole in the ground (or worse, the technician with a business degree).

-

2013-03-03, 10:04 AM #30 (Titan; Join Date: Apr 2009; Posts: 14,326)

Even though Einstein was a drop-out from engineering school, he did get top grades in mathematics and physics, so he did sort of come from the field. Working as a clerk just gave him a lot of free time to think and doodle without having to spend any time on the boring subjects he didn't like at school.

Somebody with a CS degree might have the theoretical knowledge of how to start solving problems, unlike a cowboy coder from the demoscene who's extremely familiar with low-level hardware optimizations. That matters especially in fields like multithreading, which has for the most part been a matter of scientific computation rather than squeezing the last fps out of discrete graphics chips. They're two very different approaches to programming.

-

2013-03-03, 10:14 AM #31

I suppose the question I have is: why can you have 64-player matches in FPS games just fine, while 25-man combat in WoW slows down? From a networking standpoint they are the same; the packetized information for a sniper round travelling through the air in BF3 is the same as for a fireball cast in WoW. So what specifically is going on in MMOs that brings the framerate down when it doesn't in other multiplayer games?

You've said before that the CPU is essentially waiting for data from the server, but from my point of view (with very limited programming experience), that is no reason for it not to crank out 100 FPS in the meantime.
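The intuition here, that the client shouldn't have to stall while waiting on the server, corresponds to a render loop that polls for network updates instead of blocking on them. A minimal sketch, assuming a hypothetical `run_frames` loop with a `queue.Queue` standing in for the network layer; this is an illustration, not how WoW's client is actually structured:

```python
import queue


def run_frames(updates: "queue.Queue[dict]", frames: int) -> tuple[int, dict]:
    """Hypothetical client loop: draw every frame, applying server updates
    only if they have already arrived (never blocking on the network)."""
    world: dict = {}
    rendered = 0
    for _ in range(frames):
        # Drain every update that is already queued, without waiting for more.
        while True:
            try:
                world.update(updates.get_nowait())
            except queue.Empty:
                break
        # Stand-in for drawing the current (possibly slightly stale) world state.
        rendered += 1
    return rendered, world


if __name__ == "__main__":
    q: "queue.Queue[dict]" = queue.Queue()
    q.put({"fireball": (10.0, 4.0)})
    q.put({"boss_hp": 0.93})
    print(run_frames(q, frames=100))
```

Because `get_nowait()` raises `queue.Empty` instead of waiting, every frame is drawn whether or not new data has arrived, so in this model the frame rate is bounded by the cost of drawing rather than by network latency.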

-

2013-03-03, 10:42 AM #32 (Titan; Join Date: Apr 2009; Posts: 14,326)

Because FPS games cheat on that. In team games you have team uniforms, 1-5 different models and about a half-dozen weapon types. The original red-vs-blue games literally had one player model with two recolored skins. WoW, by contrast, has tens of thousands of wearable items with gems, enchants, upgrades and transmogs, plus 26 different character models. It's not the same from a networking standpoint, and definitely not the same from the game's standpoint when it has to render all the players. That's also a big part of why FPS games can be made for consoles but MMOs can't: there simply isn't enough memory on current-gen consoles for the texture and model caching needed.
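The difference between a handful of shared team skins and a raid full of unique gear combinations can be illustrated by counting how many distinct asset sets a renderer would have to cache and draw separately. A minimal sketch; the `draw_batches` helper, the grouping key and the item IDs are all hypothetical, and real engines batch and cache in far more involved ways:

```python
from collections import Counter


def draw_batches(players):
    """Hypothetical model: players sharing the same (model, appearance) key can be
    drawn together from one cached asset set; each distinct key needs its own
    batch and its own cached models/textures."""
    return Counter((p["model"], p["appearance"]) for p in players)


# Team FPS: 64 players, 5 models, 2 team recolors.
fps_match = [{"model": i % 5, "appearance": ("red", "blue")[i % 2]} for i in range(64)]

# Raid: 25 players, each wearing 16 gear pieces that differ from everyone else's
# (item IDs are made up for illustration).
raid = [
    {"model": i % 26, "appearance": tuple(1000 * i + slot for slot in range(16))}
    for i in range(25)
]

print(len(draw_batches(fps_match)), "batches for the FPS match")
print(len(draw_batches(raid)), "batches for the raid")
```

Even with 64 players, the FPS match collapses to at most 5 × 2 asset sets, while every raider needs their own.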

Last edited by vesseblah; 2013-03-03 at 10:44 AM.


-

2013-03-03, 10:54 AM #33

More calculations (buffs, talents, etc.) when dealing damage/healing.

The number of addons.

Fewer people actually in sight range in 64-player FPS games.

More distinct character models (like vess said).

There are numerous things responsible for this. I think this is also the reason they simplified the game a bit: to save resources and make damage more predetermined/predictable.

-

2013-03-03, 02:20 PM #34

Because you don't get scenes like this one in BF3:

There are a dozen visible PCs on screen, and another 13 behind the boss/the spell effects.

There are 36 visible debuffs on the boss, and a whole lot more that are not displayed because their duration is too short. Each player has a dozen buffs ticking on them, and some have multiple debuffs. Those with debuffs are creating an arcane explosion graphic. The boss is casting a massively visible spell, while spell effects from 17 dps and a tank are all hitting him.

Let's break it down even further:

Say every dps and tank has 5 debuffs.

18 × 5 = 90 debuffs

Say everybody has 17 buffs, like I do:

25 × 17 = 425 buffs

Say there are 10 ranged dps, each having two spells in the air at one time:

10 × 2 = 20 spells in the air

There are 6 healers, each casting spells that have ground effects and other spell effects; let's say they have two each at any one time:

6 × 2 = 12 healing spell effects

There are 25 PCs that are moving, plus pets and totems. Movement is calculated client side.

Those 25 PCs are each wearing 16 pieces of gear, and none of it is uniform.

25 × 16 = 400 pieces of gear

I'm sure I've missed some things too.
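Adding up the post's own estimates gives a rough count of things the client has to track or draw in that one scene, before counting movement, pets, totems, or anything else missed. A small tally, using only the figures from the breakdown:

```python
# Per-category counts, all taken from the estimates in the breakdown.
effects = {
    "debuffs (18 x 5)": 18 * 5,
    "buffs (25 x 17)": 25 * 17,
    "spells in the air (10 x 2)": 10 * 2,
    "healing spell effects (6 x 2)": 6 * 2,
    "pieces of gear (25 x 16)": 25 * 16,
}

for name, count in effects.items():
    print(f"{name:<32}{count:>5}")
print(f"{'total':<32}{sum(effects.values()):>5}")
```

That comes to 947 items in one snapshot of a 25-man fight.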

Compare that to a BF3 map with 64 players. They all look the same. They aren't all shooting all the time. You aren't even seeing a quarter of them at any one time, and if they are behind walls or otherwise invisible, they don't get rendered at all.
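Skipping players you can't see is called culling. A minimal sketch of the simplest form, a 2D view-cone plus draw-distance check; the `in_view` helper, the 90° field of view and the 150-unit draw distance are assumptions for illustration, walls (occlusion) are not modeled, and real engines use 3D frustum and occlusion culling:

```python
import math


def in_view(viewer, facing_deg, target, fov_deg=90.0, draw_distance=150.0):
    """Return True if `target` lies inside the viewer's 2D view cone and draw
    distance. Occlusion by walls is NOT modeled here."""
    dx, dy = target[0] - viewer[0], target[1] - viewer[1]
    if math.hypot(dx, dy) > draw_distance:
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between facing direction and target, wrapped into [-180, 180).
    offset = (bearing - facing_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2


players = [(10, 0), (-10, 0), (200, 0), (10, 5), (0, 40)]
drawn = [p for p in players if in_view((0, 0), 0.0, p)]
print(f"drawing {len(drawn)} of {len(players)} players:", drawn)
```

Everything that fails the check never reaches the GPU, which is part of why a 64-player map can cost less per frame than a 25-man raid where everyone is bunched up on screen.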

WoW puts a lot of the calculation load on the CPU. This is why people who buy the "i7 2600, 10GB RAM, GT630" rigs at Walmart can play the game, and probably one of the reasons why so many still play it: they CAN still play it. That PC wouldn't even start RIFT, for example, let alone play it, and good luck playing BF3 with a GT630 in your computer.

That is why WoW (and MMOs in general) is so demanding when you move into large-scale events, while FPS games are not. There is quite simply a lot more going on.

Last edited by Butler to Baby Sloths; 2013-03-03 at 02:22 PM.

-

2013-03-03, 04:38 PM #35 (Scarab Lord; Join Date: Feb 2011; Posts: 4,030)

You, sir, have completely missed the point.

Put simply: even if game engines were fully optimized to balance CPU load across all cores, it still wouldn't matter how many cores AMD hands out, because the bottleneck is the GPU, not the CPU. In the standard AAA FPS game, the bottleneck is so far from being a CPU bottleneck (50% multithreaded utilization of a 3570K with a 7970 GE/680) that future GPUs within the next 2-4 years won't cause a CPU bottleneck on a 3570K unless the game engine is retuned to depend more heavily on the CPU.
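The argument rests on the CPU and GPU working on consecutive frames in parallel, so that the slower stage sets the frame time. A toy model of that claim; the `pipelined_fps` helper, the millisecond figures and the 80% parallel fraction are assumptions, and the CPU side is split across cores with Amdahl's law:

```python
def pipelined_fps(cpu_work_ms, gpu_ms, cores, parallel_fraction=0.8):
    """Hypothetical model: CPU and GPU stages overlap across frames, so the
    slower stage determines the frame time."""
    # Amdahl's law: only `parallel_fraction` of the CPU work spreads across cores.
    cpu_ms = cpu_work_ms * ((1 - parallel_fraction) + parallel_fraction / cores)
    return 1000.0 / max(cpu_ms, gpu_ms)


# GPU-bound case: 16 ms of GPU work per frame, 10 ms of single-core CPU work.
for cores in (2, 4, 8):
    print(cores, "cores:", round(pipelined_fps(10.0, 16.0, cores), 1), "fps")
```

In this model, extra cores change nothing once the GPU stage is the longer one; they only help in a CPU-bound case, such as 40 ms of CPU work against the same 16 ms of GPU work.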

I would also add that a lot of optimizations can be performed on FPS games that can't be performed on MMOs because of the much greater variety of character models and maps. Doing such optimizations for a game like WoW would probably balloon the install to 100GB+.

Last edited by yurano; 2013-03-03 at 04:40 PM.

-

2013-03-03, 04:47 PM #36

I can't explain why yet, but this doesn't always hold true. If what you say is true, that a 3570K doesn't need to be fully utilised to saturate an HD 7970/GTX 680, then in theory it shouldn't gain a single frame per second from overclocking.

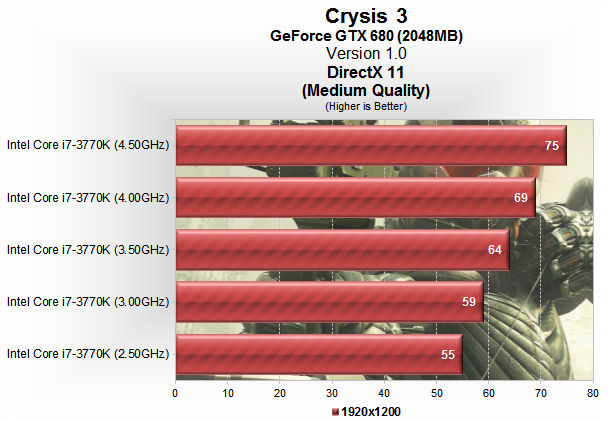

And yet, it does:

Not sure if the GPU load was 99% all the time though.

I used to believe this too, but recent games have shown different results. Just as memory bandwidth turned out to be the cause of some previously unexplained GPU performance issues, there's got to be an explanation for this as well.

Last edited by Majesticii; 2013-03-03 at 04:53 PM.

-

2013-03-03, 05:06 PM #37 (Scarab Lord; Join Date: Feb 2011; Posts: 4,030)

Says right at the top: "Medium quality".

Plus, there's an issue of scheduling. Ideally, we'd want the CPU's task to end right before the GPU's task starts, to reduce desync and latency. If the CPU finishes the next frame's timing/location calculations before the GPU is even halfway done with the current frame, there's going to be increased latency between what's actually happening in the game logic and what you see on screen. As such, game engines employ a scheduler that uses prediction algorithms to start the CPU's task so that it completes right before the GPU is ready to start drawing the next frame. As with all prediction algorithms, there are going to be inaccuracies. In particular, we're interested in the cases where the CPU's task completes a few milliseconds after the GPU is ready for the next frame. In those cases, a higher clock (shortening the CPU's task time) increases framerates by reducing the impact of such scheduling misses.
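This explanation can be checked with a small simulation: start the CPU task so it is predicted to finish exactly when the GPU is ready, add random prediction error, and let any overrun stall the GPU. A sketch under stated assumptions: the `average_fps` helper, the 20% Gaussian error, the 8 ms/16 ms frame costs and the 3.4 GHz stock clock are all illustrative, not measured from any engine:

```python
import random


def average_fps(gpu_ms, cpu_ms, clock_ghz, base_ghz=3.4, jitter=0.2, frames=10_000, seed=1):
    """Hypothetical model of a predictive frame scheduler.

    The CPU task is started so it is *predicted* to finish exactly when the GPU
    is ready for the next frame. Any overrun past that point stalls the GPU.
    """
    rng = random.Random(seed)
    predicted_ms = cpu_ms * base_ghz / clock_ghz  # higher clock -> shorter CPU task
    total_ms = 0.0
    for _ in range(frames):
        actual_ms = predicted_ms * (1 + rng.gauss(0, jitter))  # prediction error
        overrun_ms = max(0.0, actual_ms - predicted_ms)  # late CPU: GPU sits idle
        total_ms += gpu_ms + overrun_ms
    return 1000.0 * frames / total_ms


stock = average_fps(gpu_ms=16.0, cpu_ms=8.0, clock_ghz=3.4)
overclocked = average_fps(gpu_ms=16.0, cpu_ms=8.0, clock_ghz=4.5)
print(f"stock: {stock:.1f} fps, overclocked: {overclocked:.1f} fps")
```

Even though the CPU needs only half the GPU's frame time on average, the overclocked run comes out slightly faster, because a shorter CPU task also shrinks the size of each late finish.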

In addressing memory bandwidth, there's always the issue of +1, a common problem in CS. Imagine a checkout lane at your local Walmart that can process 10 customers per hour. Even at a load of 5 customers per hour (not saturated), there's a chance that when you arrive at the lane, someone's already there being processed, since these transactions (like GPU transactions) aren't exactly periodic. So you're going to have to wait for the person currently being processed before your order is handled. If we increase the checkout lane's throughput to 20 customers per hour at the same load of 5 customers per hour, there's a reduced chance that you'll run into that non-optimal condition. Moreover, if you do find another customer in the queue, the time you have to wait is reduced as well.
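The checkout-lane analogy lines up with the textbook M/M/1 queue. The post doesn't name a queueing model, so this sketch assumes random (Poisson) arrivals and exponentially distributed service times; `mm1_stats` is a hypothetical helper:

```python
def mm1_stats(arrivals_per_hour, served_per_hour):
    """Textbook M/M/1 queue (assumes Poisson arrivals and exponential service
    times). Returns (probability the lane is busy when you arrive,
    average minutes spent waiting before service starts)."""
    if arrivals_per_hour >= served_per_hour:
        raise ValueError("queue is saturated; waits grow without bound")
    rho = arrivals_per_hour / served_per_hour  # utilization
    wait_hours = rho / (served_per_hour - arrivals_per_hour)
    return rho, 60 * wait_hours


for rate in (10, 20):
    busy, wait = mm1_stats(5, rate)
    print(f"lane serving {rate}/h at a 5/h load: busy for {busy:.0%} of arrivals, "
          f"average wait {wait:.1f} min")
```

Under these assumptions, doubling the lane's speed cuts the chance of finding it busy from 50% to 25% and the average wait from 6 minutes to 1, which is both effects the post describes.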

While these issues do make an impact, the impact is marginal. You can get some more performance by minimizing these errors, but they're largely trumped by any other real problem.

Last edited by yurano; 2013-03-03 at 05:11 PM.

-

2013-03-03, 05:18 PM #38

Says below the graph: "not sure if the GPU load was 99%". I also said I had no explanation yet, and was merely showing an exception to the statement.

That said, your explanation sounds plausible, but I'd have to investigate it myself to verify. Also, I'd reckon that with those schedulers you're knowingly restricting your performance to avoid anomalies.

It would help if they tested this at lower and higher settings, so you could tell whether the extra CPU power will benefit your current GPU setup. Might do that myself at some point to verify, if I have time.

Last edited by Majesticii; 2013-03-03 at 05:21 PM.

-

2013-03-03, 06:21 PM #39 (Deleted)

Also, why is overclocking not included in the comparison?

-

2013-03-03, 08:23 PM #40 (Titan; Join Date: Apr 2009; Posts: 14,326)


MMO-Champion
