Since NVIDIA hasn't actually said anything about this themselves, I'm not claiming it's true, but I think we can definitely consider it likely.

Here's the original article:

http://translate.google.com/translat..._58399310.html

Translated from Danish, I believe, so yes, the English is rough... Looking very interesting though!

-

2012-11-15, 06:53 PM #1

More GTX 780 info/rumors arising....

-

2012-11-15, 07:38 PM #2

Interesting, but I must admit, I can't see Nvidia releasing the 7xx desktop series so soon. We've already seen that all of next year's games can be run on the 6xx series, so most people won't even glance at the 7xx's.

I could see it coming out in Q3/Q4 next year, but even then, games and technology haven't really moved forward enough to benefit from it. People running multi-screen setups and the like are the more likely target audience, though; if so, perhaps NVIDIA is simply trying to branch out in that direction instead.

-

2012-11-15, 07:39 PM #3

German. Not Danish. Otherwise, it's a little hard to tell if it's legit.

-

2012-11-15, 07:40 PM #4

You're not thinking about certain other things: 4K resolution is coming. That will bring a 680 to its knees, especially if you want to run something like BF3 at that resolution.

Not to mention we'll need GPUs with better native I/O ports; a newer DisplayPort revision, please.

A 680 isn't going to run a GPU-intensive game well at 2560x1440, which I'd say is where things are heading. Don't be so sure.

---------- Post added 2012-11-15 at 07:41 PM ----------

Well, we saw that Swedish article ages ago, but I hope this is real; it would be very interesting.

-

2012-11-15, 07:42 PM #5

Tasty...so tasty. I want. Now.

-

2012-11-15, 09:39 PM #6 (Deleted)

Hmm, doesn't NVIDIA usually give mid-range chips an xx4 code? 104 was mid-range, if I'm not mistaken, and 110 is the new high end. Could be wrong, though.

And it won't launch this year, or even in the first quarter. I think the factories would already have to be doing trial runs by then; tape-out, or whatever it's called.

-

2012-11-15, 09:54 PM #7

Another German computer site, which I trust far more than chip.de, has already commented on this news, and according to them it's more likely a rumor with very little chance of being true.

The GTX 780 should be based on the GK110 rather than the GK114.

http://www.3dcenter.org/news/hardwar...-november-2012

(I don't know how to link it through Google Translate.)

Last edited by Sarevoc; 2012-11-15 at 10:00 PM.

-

2012-11-15, 10:21 PM #8

While I understand where you're coming from, Sarevoc, I also wouldn't put it past NVIDIA to base it on the GK110 but rename it because of a revision, minor or not.

-

2012-11-15, 10:34 PM #9 (Deleted)

Not sure why they would release it so early. Even business-wise, it doesn't make sense.

-

2012-11-15, 10:37 PM #10

Some details are a bit unlikely, like how they would still use high-quality memory while also widening the memory bus. Historically, higher-quality memory (read: memory that clocks higher) has been used to offset a narrower memory bus and still get comparable performance (though not quite, especially as textures and resolutions increase).

Having both on the same card seems very unlikely from a manufacturing-cost perspective. If it were released like that, however, the performance would be outright massive. The Kepler 600 series' weakness is precisely its memory and memory bandwidth.
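The trade-off above comes down to simple arithmetic: peak theoretical bandwidth is the bus width in bytes times the effective per-pin data rate. A minimal Python sketch; the data rates below are illustrative round numbers I picked, not confirmed specs for any card:

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    bus_width_bits: total memory interface width (e.g. 256 or 384).
    data_rate_gbps: effective per-pin data rate in Gbit/s (the GDDR5 "effective clock").
    """
    bytes_per_transfer = bus_width_bits / 8  # one bit per pin per transfer
    return bytes_per_transfer * data_rate_gbps


# Illustrative configurations (assumed numbers, not leaked specs):
narrow_fast = memory_bandwidth_gbs(256, 6.0)  # narrow bus, fast memory
wide_slow = memory_bandwidth_gbs(384, 4.0)    # wide bus, slower memory
wide_fast = memory_bandwidth_gbs(384, 6.0)    # "both on the same card"

print(narrow_fast, wide_slow, wide_fast)  # 192.0 192.0 288.0
```

The first two land on the same figure, which is the "offset a narrower bus with faster memory" pattern; the third shows why doing both would be such a big jump (50% more than either).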

And as Zeara points out, it's a GKxx4, and I share the same doubts. Fermi's top GPUs were GF100 and GF110. GK104 was named that way because they expected to release the GK110. But given that GK110 wasn't called GK100, stranger things might happen.

Personally, I think this sounds more like a wish-list than reality.

-

2012-11-15, 11:21 PM #11

Meh, rumors, rumors, rumors. There are a billion articles like these regularly posted on OCN and discussed by the math-heads over there, but still no concrete info. Well, we can at least be sure it will have a 384-bit memory bus (yay, caught back up to the GTX 580, I see?) and push shader/core counts way up, but how that will translate into performance... some people are saying 10-15%, the more optimistic ones up to 25-30%. Christ, the card would launch at $600+ if it were that big a jump...

To hell with that; there's a solid reason I skipped buying a 680 this time.

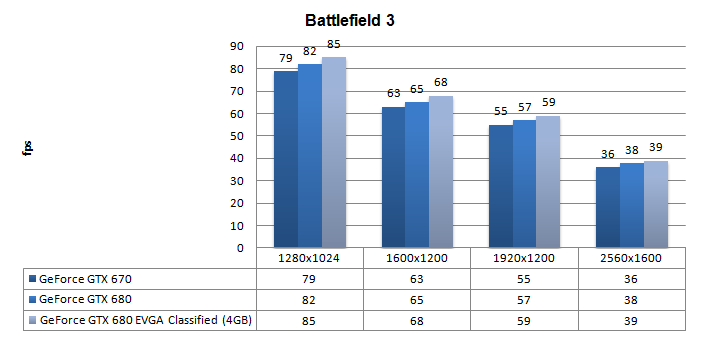

120Hz monitors are getting more popular by the day, and I'm not even going to post benches to prove that GPU-heavy games aren't anywhere close to the 100-120fps mark, even on the fastest single cards.
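For context on why 100-120fps is such a high bar: the time the GPU gets per frame is just 1000 ms divided by the target frame rate. A quick Python sketch (the helper name is my own, purely for illustration):

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to render each frame at a given target frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


for fps in (60, 100, 120):
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
# 60 fps -> 16.67 ms per frame
# 100 fps -> 10.00 ms per frame
# 120 fps -> 8.33 ms per frame
```

Going from a 60Hz target to 120Hz halves the per-frame budget, from roughly 16.7 ms to 8.3 ms.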

And forget multi-monitor; have you seen what happens when games are run at 1600p?

This:

So yes, we still need a single-GPU card that can stand up to the true challenges. GTX680 was supposed to be that card, but it wasn't, so fingers crossed GTX780 gets there.

Can you believe we're still looking for a single card that can max out the original Crysis at a minimum of 60 fps @ 1080p? Yeesh >_<

Last edited by Xuvial; 2012-11-16 at 08:26 AM.

WoW Character: Wintel - Frostmourne (OCE)

Gaming rig: i7 7700K, GTX 1080 Ti, 16GB DDR4, BenQ 144hz 1440p

Signature art courtesy of Blitzkatze

-

2012-11-15, 11:47 PM #12

Isn't Crysis badly coded, though? I may be wrong, but isn't Crysis 2 more graphics-intensive yet better coded, so it naturally gets better fps?

-

2012-11-15, 11:52 PM #13 (Legendary!)

Yep. Crysis 1 was horribly optimized, and so was Metro 2033. Crysis gets used for benchmarks all the time, but it really doesn't make a great benchmark; it just shows how poorly optimized it was, given that far superior hardware still has some issues with it all these years later.

Edit: That said, on release Crysis 2 took some sloppy shortcuts to give the illusion of graphical quality, but a lot of those were improved in a later patch/optional download.

-

2012-11-16, 01:21 AM #14

Yup, Crysis isn't the best-optimized game, but it goes for a different kind of visual/art direction compared to Crysis 2 (keep in mind I'm talking about C2 with DX11 + MaLDo textures, not the pathetic DX9 blurry-textured mess that was Crysis 2 on release). If you asked people which game looks better, it would be like taking two recent Miss World winners and asking which was more beautiful; you would get a completely split response.

And talking performance, Crysis 2 + DX11 + MaLDo textures actually KILLS framerates just as much as the original Crysis; check out the benches. I personally feel that the fully realized Crysis 2 does look a bit better, but the scenic shots of Crysis's tropical island and the way the soft light filters through the palm trees are still orgasm-worthy.

I mean look at this, just fucking look at this (direct link to image):

http://img401.imageshack.us/img401/4...hot0000qw8.jpg

THAT'S how you do lighting without lens-flaring the player blind *cough* BF3 *cough*

Anyway, enough of me harping on about Crysis; back to the GTX780 :P

Last edited by Xuvial; 2012-11-16 at 01:31 AM.

-

2012-11-16, 08:19 AM #15 (Deleted)

So this is the chip that was supposed to be the 680, eh...

Well, I have a 680 at the moment, so I'll just skip the entire 700 series. It's not like I'm going to upgrade to 4K soon anyway; that's for enthusiasts who already run at 1600p. I can live with 1080p for a while longer...

-

2012-11-16, 08:29 AM #16

Actually no, it was supposed to be the GK110.

But that turned out to be a chip they're using in their Tesla cards (i.e. non-gaming 3D rendering powerhouses).

Actually, the naming scheme is confusing; hang on, lemme look up some posts on OCN that try to explain it all -_-

Not three variants of Kepler, but only one. Those are three possible codenames NVIDIA could use for the Kepler refresh.

GK104 would be replaced by either GK114, GK110 or GK204.

GK106 would be replaced by either GK116 or GK206.

GK107 would be replaced by GK117 or GK118.

But as per my first post, GK208, if it ends up in the GeForce 700 series, would not match the performance of GK107, since it's really low-end. Hence we won't see a GTX 750 on GK208, but rather a GT 720.

NVIDIA's Kepler refresh is currently planned for late Q1 to early Q2 2013. Maxwell has been pushed to 2014, and overclock.net has known that for some time now.

I think they're making more and more sense with time.

GK stands for the Kepler architecture, just as GF stood for Fermi and GT for Tesla (so we can infer that Maxwell codes will start with GM). That makes it actually easier to tell what's in the GPU from the codename than from the product number (for instance, many of the really low-end GeForce GT 600 cards use GF108 and GF119 GPUs...).

Then there's the number designation, which in the short time we've had these codes has denoted the class of GPU:

100

104

106

etc

The ones digit represents the pecking order (i.e. 104 > 106), and the tens digit has come to represent a revision (i.e. 114 is a newer version of 104). They haven't done much with the hundreds digit so far, so it'll be interesting to see what it might mean as they finally start giving their codes a more concrete structure.

Last edited by Xuvial; 2012-11-16 at 08:34 AM.
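The informal scheme described above can be sketched as a tiny decoder. This is only a reading of the pattern discussed in this thread, not an official NVIDIA convention; `decode_codename` and `outranks` are hypothetical helpers, and the GM/Maxwell prefix is the inference made above, not a confirmed code:

```python
# Architecture prefixes as described in this thread; "GM" is inferred, not confirmed.
ARCH_PREFIXES = {
    "GT": "Tesla",
    "GF": "Fermi",
    "GK": "Kepler",
    "GM": "Maxwell (inferred)",
}


def decode_codename(code: str) -> dict:
    """Split a codename like 'GK114' into its architecture and three digits."""
    prefix, digits = code[:2].upper(), code[2:]
    if prefix not in ARCH_PREFIXES or len(digits) != 3 or not digits.isdigit():
        raise ValueError(f"unrecognized codename: {code!r}")
    return {
        "architecture": ARCH_PREFIXES[prefix],
        "hundreds": int(digits[0]),  # meaning still unclear, per the post above
        "revision": int(digits[1]),  # tens digit: 114 is a newer version of 104
        "tier": int(digits[2]),      # ones digit: lower means higher in the pecking order
    }


def outranks(a: str, b: str) -> bool:
    """True if codename a sits higher in the pecking order than b (e.g. 104 > 106)."""
    return decode_codename(a)["tier"] < decode_codename(b)["tier"]


print(decode_codename("GK114"))
print(outranks("GK104", "GK106"))  # True
```

Comparing only the ones digit ignores the revision and hundreds digits, so it only makes sense between chips of the same generation, as in the 104 vs 106 example.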

-

2012-11-16, 09:51 AM #17

That was kind of my point: the mass market isn't made up of x1440/x1600/multi-monitor users, so it's hugely unlikely that they're going to go out of their way to push that at the moment.

The current generation is designed around x1080/x1200 users, and that's where their cards sell like crazy. As you point out, their current cards obviously just aren't designed to handle higher resolutions or anything like that. However, it's highly unlikely they'll do anything about that until Q3/Q4 next year at the earliest.

Even if game visuals take a huge leap in the second and third quarters for some reason (not likely until the new consoles, or at least the new Xbox, are clearly announced and in production), any cards they make will still be designed to run lower resolutions at higher frame rates, with higher resolutions maybe starting to hit the 60fps mark as well.

More than likely any 790 they create will be aimed at the high resolution market, with a limited number made.

-

2012-11-16, 10:12 AM #18

Eeek, don't say 790; that makes me think it'll be two 780s on a single PCB (i.e. SLI), which I want to avoid >_<

-

2012-11-16, 10:30 AM #19

What's all this talk about 4K resolutions? We still need 1440p to become the norm.

Playing since 2007.

-

2012-11-16, 04:24 PM #20

What's stopping you from getting one now? That you want 120Hz? Those are likely a wee bit off, still.

Either way, we need a renewal in the resolution department. 1920x1080 and the Full HD concept are among the worst things that have happened to it, since once Full HD was reached, everything stagnated. Do we need to push onwards past 4K? It's easy for me to say "no" right now, but then again, ten years ago I would've laughed at the idea of ever needing more than 2GiB of RAM or 1GiB of VRAM. Resolution doesn't translate directly into that kind of comparison, but we will need improvements. 2560x1600 on a 5" phone? Perhaps not. On a 15.6"-17" notebook? Yes. 4K at 24-30"? Yes. Beyond that, I personally think we would just eat a performance hit for no noticeable benefit. Resolutions of 1440p and above do greatly reduce the need for AA, however, which is a plus.
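To put numbers on the resolutions discussed in this thread, here is a quick pixel-count comparison against 1080p. Pixel count is only a rough proxy for GPU load (it says nothing about shader cost, AA or memory use), and I'm assuming "4K" means 3840x2160 UHD rather than 4096x2160 cinema 4K:

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "1600p": (2560, 1600),
    "4K UHD": (3840, 2160),  # assumed definition of "4K"
}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_to(name: str, baseline: str = "1080p") -> float:
    """How many times more pixels `name` has than `baseline`."""
    return pixel_count(name) / pixel_count(baseline)


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels, {relative_to(name):.2f}x 1080p")
```

4K UHD works out to exactly four times the pixels of 1080p, and 1600p to roughly twice.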
