-

2013-03-03, 09:04 PM #41 Deleted

-

2013-03-03, 09:19 PM #42 Titan

- Join Date

- Oct 2010

- Location

- America's Hat

- Posts

- 14,142

I think, though, that in the next couple of years multithreading is going to be very important to gaming and the computer industry as a whole. Next-generation consoles are coming and will usher in a new era of graphics technology for the TV gamer market. Studios are going to start optimizing for processor efficiency instead of doing what they do now on consoles, which is maxing out the CPU just to render the graphics properly; that isn't efficient for the processor itself. Advanced lighting, shading and textures are impossible on current-generation consoles (Wii U excluded), and physics doesn't work well at all because the systems can't process it. With the next-generation systems, and PCs alike, physics and advanced graphics features will be available on all platforms. I think you will see better use of PC technology, since consoles will be competitive with it for a few years.

PCs aren't even optimized to run games effectively, since so many games are designed with consoles in mind and then ported to the PC. I think the opposite is going to happen with the next generation: the PC will still be more powerful, but the technology in the consoles will all be pretty similar, so studios will design for the computer first and then port as needed. In terms of cost effectiveness, having all three consoles running on x86 next generation is going to help as well.

-

2013-03-03, 09:33 PM #43 Mechagnome

- Join Date

- Sep 2009

- Posts

- 695

-

2013-03-03, 09:40 PM #44 Titan

- Join Date

- Apr 2009

- Posts

- 14,326

When the new consoles come out, they will be based on year-old midrange PC hardware because of long development times and the need to keep prices and cooling at a reasonable level. My bet is they will be about on par with an A10-5800 processor and a Radeon 7670-series GPU, not much higher than that.

Never going to log into this garbage forum again as long as calling an obvious troll an obvious troll is the easiest way to get banned.

Trolling should be.

-

2013-03-03, 09:42 PM #45 Mechagnome

- Join Date

- Sep 2009

- Posts

- 695

-

2013-03-03, 09:43 PM #46 Titan

- Join Date

- Apr 2009

- Posts

- 14,326

-

2013-03-03, 09:58 PM #47 Deleted

-

2013-03-04, 12:00 AM #48

The current expectation is that, because both of the new consoles are x86-based, games will be developed for the PC first and then ported down. However, in the case of the PS4, with it running Linux and having direct hardware access, a port script could be made to easily port PS4 games to OpenGL for Linux-based computers.

-

2013-03-04, 12:14 AM #49 Titan

- Join Date

- Apr 2009

- Posts

- 14,326

Not gonna be that easy, because Linux doesn't really give games direct hardware access any more than Windows does.

That would be really nice, but it remains to be seen. Porting code nowadays is trivial anyway, since people use cross-platform engines like Unity to make the games; the problem is texture quality, which is kept low because of the consoles' limited video RAM. Making high-res textures and scaling them down is a lot more expensive than making low-res textures and just continuing to feed PC players shit like they do now.

-

2013-03-04, 12:21 AM #50

-

2013-03-04, 12:24 AM #51 Warchief

- Join Date

- Jun 2010

- Posts

- 2,094

Hmm, I think this is going to be slightly off-topic, but I'd like to share this video on how to get your CPU running at 100% to gain higher GPU loads in Crysis 3. Check nocturnal's pics of the CPU loads, where you can see the CPU isn't hitting its limit yet.

It's only going to be useful if you use cards like GTX 690s in SLI or Titans in SLI. With a single 680 this video isn't needed, but it's still worth checking out. Seems like he was able to push 12-15% extra on each of his three 680s.

-

2013-03-04, 01:58 AM #52 Scarab Lord

- Join Date

- Feb 2011

- Posts

- 4,030

Last edited by yurano; 2013-03-04 at 02:02 AM.

-

2013-03-04, 02:20 AM #53 Warchief

- Join Date

- Jun 2010

- Posts

- 2,094

-

2013-03-04, 04:07 PM #54 Scarab Lord

- Join Date

- Feb 2011

- Posts

- 4,030

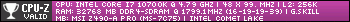

Background: AFAIK, a 3770K has 23 PCIe 3.0 lanes. Some are used for the USB3/SATA bus, so for the purposes of PCIe cards only 20 lanes are available. For SLI/CF the lanes are split as follows: 16x for one card, 8x/8x for two cards, or 8x/8x/4x for three cards (depending on the motherboard). In addition, PCIe 3.0 8x has roughly the same bandwidth as PCIe 2.0 16x.
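A quick sanity check of the "PCIe 3.0 8x vs PCIe 2.0 16x" equivalence and of the lane splits above. This is a rough sketch that assumes the published signaling rates (5 GT/s with 8b/10b encoding for PCIe 2.0, 8 GT/s with 128b/130b encoding for PCIe 3.0) and ignores packet/protocol overhead; the helper names are made up for illustration.

```python
# Rough per-direction PCIe link bandwidth from transfer rate and line encoding.
# Assumption: PCIe 2.0 = 5 GT/s with 8b/10b, PCIe 3.0 = 8 GT/s with 128b/130b.
# Protocol/packet overhead is ignored, so real throughput is somewhat lower.
GENERATIONS = {
    "2.0": (5.0e9, 8 / 10),
    "3.0": (8.0e9, 128 / 130),
}


def lane_mb_per_s(gen: str) -> float:
    """Usable MB/s for one lane in one direction (hypothetical helper)."""
    transfers_per_s, efficiency = GENERATIONS[gen]
    return transfers_per_s * efficiency / 8 / 1e6  # bits -> bytes -> MB


def link_mb_per_s(gen: str, lanes: int) -> float:
    """Usable MB/s for a link of the given width (x1, x4, x8, x16)."""
    return lane_mb_per_s(gen) * lanes


if __name__ == "__main__":
    print(f"PCIe 2.0 x16: {link_mb_per_s('2.0', 16):.0f} MB/s")
    print(f"PCIe 3.0 x8:  {link_mb_per_s('3.0', 8):.0f} MB/s")
    # Per-card bandwidth for the lane splits in the post, assuming PCIe 3.0 slots.
    for split in [(16,), (8, 8), (8, 8, 4)]:
        per_card = [round(link_mb_per_s("3.0", n)) for n in split]
        print("x" + "/x".join(map(str, split)), per_card)
```

Run as a script, it prints about 8000 MB/s for PCIe 2.0 x16 and about 7877 MB/s for PCIe 3.0 x8, so the two are within roughly 2% of each other, while the third card in an 8x/8x/4x split gets only half the bandwidth of the other two.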

http://i.imgur.com/4sYbB.jpg

The above linked image is a test done by /r/gamingpc comparing PCIe 2.0 16x/16x (equivalent to PCIe 3.0 8x/8x) vs PCIe 3.0 16x/16x for SLI of two cards. In the bandwidth-limited case (PCIe 2.0 16x/16x) there's some reduction in performance at 1920x1080, albeit marginal. The performance penalty is much worse at 5760x1080. The YouTube video you linked seemed to be using 1440p, which falls between 1920x1080 and 5760x1080 in terms of pixel count.
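To put numbers on the resolution comparison, here is a small sketch that counts pixels and places 1440p on the scale between 1920x1080 and 5760x1080. It assumes "1440p" means 2560x1440 and that 5760x1080 is three 1080p screens side by side; the function names are illustrative only.

```python
# Compare pixel counts of the resolutions discussed in the post.
RESOLUTIONS = {
    "1920x1080": (1920, 1080),
    "2560x1440": (2560, 1440),  # assumed meaning of "1440p"
    "5760x1080": (5760, 1080),  # three 1920x1080 screens side by side
}


def pixels(res: str) -> int:
    """Total pixel count for a named resolution."""
    width, height = RESOLUTIONS[res]
    return width * height


def position_between(res: str, low: str, high: str) -> float:
    """Where res falls between low (0.0) and high (1.0) by pixel count."""
    return (pixels(res) - pixels(low)) / (pixels(high) - pixels(low))


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixels(name):,} pixels")
    frac = position_between("2560x1440", "1920x1080", "5760x1080")
    print(f"1440p sits {frac:.0%} of the way from 1920x1080 to 5760x1080")
```

This gives 2,073,600, 3,686,400 and 6,220,800 pixels respectively, placing 1440p about 39% of the way from 1920x1080 to 5760x1080, i.e. between the two but somewhat below the midpoint.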

We must also consider that the YouTuber used 3-way SLI (8x/8x/4x) instead of 2-way SLI. The same bandwidth bottleneck is observed in the following link.

http://www.evga.com/forums/tm.aspx?m=1537816&mpage=1

-

2013-03-04, 05:24 PM #55 Warchief

- Join Date

- Jun 2010

- Posts

- 2,094

I think you're making a big mistake. A lane bottleneck would mean you can never get higher GPU loads, but in his case he did reach higher GPU loads. The lanes aren't the bottleneck here; the CPU was, which is why it was running at 100% while the GPUs were chilling at 60% load.

He can use boards like the Maximus V Extreme, which easily supports quad SLI at 8x/8x/8x/8x because of its PLX chip. The PLX chip adds lag, or to be more precise, it increases frame render latency, and that is a separate issue from the GPU loads.

Does a GTX 690 in a single PCI Express slot run at 16x or 8x? I'm not sure about this. If it runs at 16x and you add another 680, you'd have 8x (690)/8x (680), so I don't see the problem here.

-

2013-03-04, 05:30 PM #56

-

2013-03-04, 05:32 PM #57 Scarab Lord

- Join Date

- Feb 2011

- Posts

- 4,030

-

2013-03-04, 05:56 PM #58 Warchief

- Join Date

- Jun 2010

- Posts

- 2,094

Yes, he is using a Maximus V Extreme. http://www.youtube.com/watch?v=YUKathtsFEw

So your bandwidth limitation argument is invalid.

---------- Post added 2013-03-04 at 06:57 PM ----------

Seems like that's only possible with a 6990 + 6970. So no, I guess.

-

2013-03-04, 06:57 PM #59


MMO-Champion
