Thread: Post your gaming setup!

-

2011-12-31, 12:22 AM #5101

-

2011-12-31, 12:25 AM #5102

-

2011-12-31, 12:30 AM #5103

Quite a lot, world record is held now by the 7970 for single GPU in HWBot afaik.

Some crazy person hit 1700 MHz as well.

Digital Rumination

Plays: Sylvanas EU - Fierae (Druid) | HotS | EVE | PUBG

Played: Rift | Guild Wars 2 | SW:TOR | BF4 | Smite | LoL | Skyrim

Ryzen 1920X - 32GB - 980Ti SLI - PCIE NVMe 1GB SSD - Enthoo Primo - Full WC - 4K

-

2011-12-31, 12:38 AM #5104

Anybody care to explain exactly what this means though:

Does it mean that they simply disabled part of the graphics card?

Originally Posted by JCPUser@overclock.net

-

2011-12-31, 12:43 AM #5105

-

2011-12-31, 01:10 AM #5106

-

2011-12-31, 01:57 AM #5107

-

2011-12-31, 01:05 PM #5108

Either that, or it could mean that they are actually intentionally limiting it, so they can push out a newer GPU later, when nVidia pushes Kepler. Because nobody expects the Spanish Inquisition.

It would be quite brilliant. Lulling nVidia into a false sense of security, and then bam. We'll see what happens later. If this is in fact true at all, of course!

(Also, it's much less expensive to develop a single GPU and then cut it down and limit it than to develop several different ones.)

-

2011-12-31, 02:04 PM #5109

The thing is, without knowing the specs of the Kepler cards, it could end up going really well for AMD, or really badly!

-

2011-12-31, 02:07 PM #5110

Why?

I mean, it's not like AMD is as afraid of nVidia as the other way around.

And there have been leaked specs already.

-

2011-12-31, 04:21 PM #5111

Specs of course, specs don't always mean performance.

It could go badly if the nVidia cards wipe the floor, even with this unknown AMD card. I don't think either company is really afraid of the other, as it's quite clear what their target markets are.

-

2011-12-31, 04:41 PM #5112

The high-end segment doesn't really matter for anything other than prestige. AMD could drop their interest in the higher end (HD x8xx and higher) and likely increase their profit.

It would only matter for the gamer and enthusiast base, and that's a very small part of the PC world. And many, many gamers hold on to their GPUs for many years.

-

2011-12-31, 04:53 PM #5113

-

2011-12-31, 04:53 PM #5114

He must've mixed it up with the HD 7000 series.

-

2011-12-31, 04:56 PM #5115

-

2011-12-31, 04:57 PM #5116

A month? More like late spring or early summer. Last I heard, it's "May". However, nVidia is said to be releasing them from the ground up, meaning low-performance and mobile parts hitting first, and ending with their flagship.

Whether they start this in May, or the highest end hits in May, I do not know.

So much for the people promising the GTX 600-series would be released before the fall. Of 2011.

---------- Post added 2011-12-31 at 05:58 PM ----------

January for the HD7970, highest end. Find reviews in the big thread about it.

The rest are likely announced in January and released in February.

Preliminary guesses here.

-

2011-12-31, 05:04 PM #5117

nVidia should be Q2, but they offset their quarters by about a month for some reason, so Q1 is like Feb-April, Q2 is May-July etc. afaik.

-

2012-01-01, 02:03 PM #5118

So, just bought L.A. Noire, and it seems to run at an unplayable FPS (10 idle), even though I should have more than the minimum requirements. Is this a known problem or just me? I have all my settings on lowest btw.

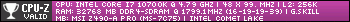

Should have posted specs:

GPU - GT 220

Some random 4gb of ram

CPU - Pentium(R) Dual-Core E5200

Last edited by Poppasan; 2012-01-01 at 02:06 PM.

-

2012-01-01, 02:05 PM #5119

I forgot what your rig looks like, Poppasan, so it's hard for me to tell.

-

2012-01-01, 02:24 PM #5120

The GT 220 is an incredibly weak and outdated card. I see nothing weird in it running L.A. Noire at such low framerates, whatever the resolution might be.

The minimum requirements list an 8600 GT, which to my knowledge is more powerful than a GT 220. Perhaps if you bring all settings as low as possible and run it at 1024x768, the framerate will rise to "playable"; say 15-20 fps.

Last edited by mmoc7c6c75675f; 2012-01-01 at 02:31 PM.
