Most people questioned in a study say self-driving cars should aim to save as many lives as possible in a crash, even if that means sacrificing the passenger. But they also said they wouldn’t choose to use a car set up in such a manner.

The study was conducted by three academics in the Department of Psychology and Social Behavior at the University of California, Irvine. They were exploring the implications of autonomous vehicles for the social dilemma of self-protection against the greater good.

Participants were asked to consider a hypothetical situation where a car was on course to collide with a group of pedestrians. They were given three sets of choices:

A) Killing several pedestrians or deliberately swerving in a way that would kill one passer-by.

B) Killing one pedestrian or acting in a way that would kill the car’s passenger (the person who would be considered the driver in a normal car).

C) Killing several pedestrians or killing the passenger.

What would you pick? Would you use a self-driving car knowing it would kill you to save others? Seems an easy decision unless you are the one in danger.

original source

-

2016-06-25, 05:02 PM #1Deleted

Should self-driving cars make moral choices?

-

2016-06-25, 05:06 PM #2

An interesting topic for ethicists to be sure, but I think the market will settle this quite decisively.

Simply put, very few people would buy a car that would choose to kill them in order to save some strangers. As such, I expect that the prime directive of self-driving cars will be preserving their occupants.

-

2016-06-25, 05:08 PM #3Deleted

-

2016-06-25, 05:08 PM #4

I guess I'll be taking the public transit system in the future then, maximizing my survival by riding with as many people as possible.

"In order to maintain a tolerant society, the society must be intolerant of intolerance." Paradox of tolerance

-

2016-06-25, 05:10 PM #5

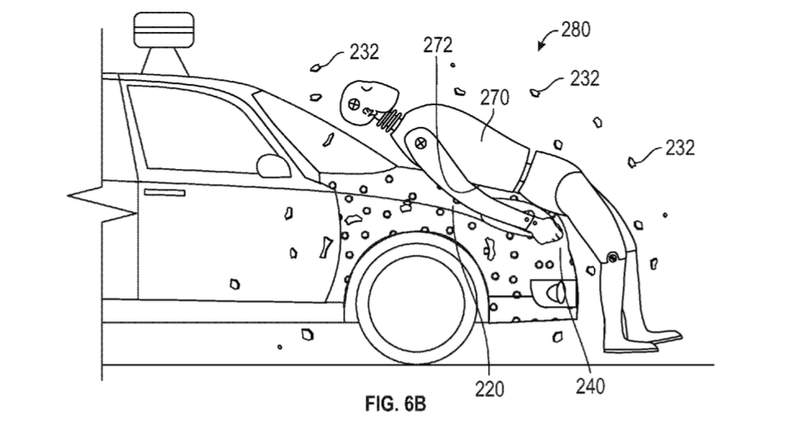

Isn't the "sticky hood" that Google patented supposed to solve this issue?

http://gizmodo.com/google-patented-a...ian-1777376162

-

2016-06-25, 05:11 PM #6

-

2016-06-25, 05:13 PM #7Deleted

This is a fun discussion to have at parties.

-

2016-06-25, 05:15 PM #8

The car should always ask "what is best for the owner?"

.

"This will be a fight against overwhelming odds from which survival cannot be expected. We will do what damage we can."

-- Capt. Copeland

-

2016-06-25, 05:16 PM #9

I'd be content just having a Tesla S so I can take naps during traffic jams.

-

2016-06-25, 05:16 PM #10The Insane

- Join Date

- Aug 2011

- Posts

- 15,873

Or self-driving should be grandfathered in to every car and we should aim to have automated highways, wherein every car connects to a "hive mind" which controls every single car in a fail-safe manner.

-

2016-06-25, 05:16 PM #11Deleted

-

2016-06-25, 05:17 PM #12

Well, when all cars are automated this won't be an issue, but until then people will probably not like the idea of their car choosing to kill them.

-

2016-06-25, 05:19 PM #13Deleted

-

2016-06-25, 05:20 PM #14The Insane

People jaywalking into the path of a self-driving car should be allowed to die. It is their negligence that causes the accident. It's not safe for a vehicle travelling at high speeds to attempt a sudden change of course. The car should apply its brakes and attempt to make a lane change (if safe to do so) but nothing more.

-

2016-06-25, 05:21 PM #15Deleted

-

2016-06-25, 05:23 PM #16

-

2016-06-25, 05:23 PM #17The Insane

These scenarios are all bad and the "Ethicists" who peddle them should feel bad.

Especially since you can't just switch tracks on the fly. This isn't fucking Hollywood. The tracks on the junction weigh in the tons, so operating the switch-track takes a considerable amount of time.

I feel like you should have to have real-world experience to propose ethical scenarios, because they all sound like they were written by a 5-year-old.

-

2016-06-25, 05:24 PM #18Pandaren Monk

- Join Date

- Jun 2011

- Posts

- 1,817

If anything, I would say self-driving should only be allowed in designated areas (i.e. highways and interstates here in America). These routes are already generally restricted in ways that keep out whatever would cause this issue in the first place. Right now interstates prohibit bikes, pedestrians and farm equipment.

-

2016-06-25, 05:25 PM #19Deleted

This... also, I'd change it to "what's best for the people in the car". And it would be the only way to make it commercially viable too.

Even then, I'd probably not drive one, or would try to swap it for a pirated bootleg AI that will always try to preserve the people in the car or my property, assuming the person in the way is at fault and shouldn't be on the road.

-

2016-06-25, 05:26 PM #20Deleted
