It will work on Nvidia cards as well.

2min 57sec mark.

The fact that this works on SOOO many cards, even Nvidia's, is insane. I feel this will take off way faster than DLSS, since that feature is limited to RTX series cards.

With GPU shortages going on, this dropping now could extend the life of many cards by years. Godfall may not be a good game, but it's a great tech showcase, and showing a 1060 hitting playable frame rates at 1440p on Epic settings in Godfall is amazing.

Legit excited for this feature.

DLSS will likely still be better, but FSR won't be limited to a small hardware pool, and in the end gamers benefit from having both options.

This feature seems to be releasing on June 22nd.

-

2021-06-03, 02:41 AM #1

AMD drops a bombshell, FidelityFX Super Resolution and...

Last edited by Jtbrig7390; 2021-06-03 at 03:48 AM.

Check me out....Im └(-.-)┘┌(-.-)┘┌(-.-)┐└(-.-)┐ Dancing, Im └(-.-)┘┌(-.-)┘┌(-.-)┐└(-.-)┐ Dancing.

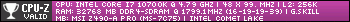

My Gaming PC: MSI Trident 3 - i7-10700F - RTX 4060 8GB - 32GB DDR4 - 1TB M.2SSD

-

2021-06-03, 02:48 AM #2

Having this in RDR2 will be great. That game looks like crap at 1080p. It was clearly made for 4K on PC.

-

2021-06-03, 03:57 AM #3


-

2021-06-03, 05:23 AM #4

Sadly, I don't think it looks very good. The pan at ~3 minutes into the video makes the "Quality" preset look garbage on foliage.

-

2021-06-03, 05:35 AM #5

To be fair, it's also being used on a very low-end video card, and there's no telling whether it's the 6GB or 3GB version. So hands-on testing will be needed, but people will be able to trade graphics for performance like with DLSS. Also, Godfall is a fast-paced game, so those textures wouldn't really be noticeable. Getting such a low-end video card to run at 1440p at playable framerates is a game changer.

Looking forward to messing with this setting myself on the 22nd.


-

2021-06-03, 05:54 AM #6

Just because it's okay in Godfall doesn't mean it's okay in other games.

Yes, it's fantastic they can get kinda-playable framerates from a 1060 at 1440p, but it still doesn't look very good. The people who'll care about this probably aren't trying to play fast-paced games on an underpowered GPU; they're trying to play sightseeing games (on an underpowered GPU), and if this is the upscaling we can get, I doubt it's going to be used much.

DLSS 1.0 was ridiculed for how shit it looked, and rightfully so. FidelityFX is hardly any better.

-

2021-06-03, 06:12 AM #7

I never said it was; I said we need to get hands-on with it to really see how it works.

Seeing how so many people gaming on PC are using a 1060, yes, they are trying to get playable framerates from a 1060. That was the whole point of showing a 1060 using this feature: based on the Steam hardware survey, the most used GPU is the 1060, followed by the 1050 Ti. If it can make this much of an improvement at 1440p, it will make a bigger one at 1080p.

https://store.steampowered.com/hwsurvey/videocard/

Out of the top 10 cards in use, only two are RTX cards, and both are 20 series. This is a feature the other eight cards in the top 10, and more, will be able to use.

This and DLSS are two different things, and like I said, we won't know how well FidelityFX works until we get hands-on with it.

One thing we do know is that this has a really good chance to extend the life of lower-end GPUs, and in a time of huge GPU shortages, that's needed.


-

2021-06-03, 06:59 AM #8

-

2021-06-03, 12:09 PM #9

While I'm somewhat excited, I'm not expecting it to blow us out of the water at the start. Got to remember DLSS was absolute trash at launch. But with time and better development, it might be good. I can see devs devoting more time to this than to DLSS, which doesn't even work on the most popular Nvidia cards. Then again, Nvidia has the money and clout to push some devs to use only DLSS and the rest of its ecosystem.

MMO-Champion Rules and Guidelines

-

2021-06-03, 12:51 PM #10

DLSS 1.0 was ridiculed, and Nvidia went back and did a lot of tuning and work to make it significantly better; now we have the somewhat respectable 2.0 version of the technology. FSR is AMD's first iteration, and quite honestly, regardless of how 'bad' it may be right now, based on the last two years or so I'm inclined to give them a lot more leeway to improve it. Look at the giant leaps they've made going from the Zen 1 platform to what we have now with the Zen 3 architecture, not to mention the monster strides on the Radeon side, where they're somewhat competitive with Nvidia at the high end for the first time in a long time. If this new AMD technology matures like Zen or Radeon did, it might take a little while, but the gains will be well worth it. AMD is doing things right now that put both Intel and Nvidia to shame in terms of R&D and advanced technologies, so it's just a matter of time.

-

2021-06-03, 01:33 PM #11

The 1.0 version isn't likely to be very good if DLSS is any indication. This is complex technology and being available on all GPUs might make it even more so. But AMD is on a roll when it comes to R&D so they might surprise us.

It's definitely a good thing that strides are being made to bring this kind of feature to more than just outrageously overpriced higher-end NVIDIA cards. DLSS isn't a must for most games, but for those that do benefit from the tech, it's really great.

It is all that is left unsaid upon which tragedies are built -Kreia

The internet: where to every action is opposed an unequal overreaction.

-

2021-06-03, 01:45 PM #12

I wouldn't draw too many conclusions about quality from a short video presentation; besides, we'll know soon enough without having to speculate, as the release date isn't far off.

Something to keep in mind is that AMD already has a better solution than DLSS 1.0 was: standard upscaling plus Radeon Image Sharpening produces better results than DLSS 1.0. I find it highly unlikely that AMD would have devoted a lot of time and effort to making a new technology that is worse than or equal to what they already have.

-

2021-06-03, 06:59 PM #13

-

2021-06-05, 11:23 PM #14

-

2021-06-06, 12:57 AM #15

In its first iteration here, it looks pretty terrible.

But DLSS was a dumpster fire at first, too, so maybe there's hope for the future, as DLSS 2.0 is basically voodoo magic.

-

2021-06-08, 10:41 AM #16

From my understanding, it uses a simpler scaling algorithm; it's not the advanced AI upscaling that Nvidia uses.

Cute, but basically pretty bad.

-

2021-06-08, 01:33 PM #17

If it's good, it's a great boon for integrated graphics. Being able to actually run every game at 1080p would be awesome.

-

2021-06-09, 03:28 PM #18

I wish they would stop focusing on graphics gimmicks, most of which are designed to boost FPS on low-performance GPUs, and just focus on giving us better rasterization per dollar.

I wouldn't NEED dumb shit like DLSS if I could actually get my hands on a GPU that could run all modern games on a 4K monitor at max quality settings and over 120fps. Hell, you wouldn't need AA at all on a 4K monitor that is 32" or smaller, because the pixel density is so high. We were close to an obtainable 4K-capable GPU this go-around, but COVID fucked that up... and probably for good. Look at Nvidia with the 3080 Ti: they're just being the scalper themselves, since demand is apparently inelastic.

Last edited by BeepBoo; 2021-06-09 at 03:31 PM.

-

2021-06-09, 04:30 PM #19

-

2021-06-09, 04:53 PM #20

"A long time" is more like 2-3 years. Not long at all. We got there with 1080, 1440, etc. We will get there with 4k at some point. I'd rather just run lower settings or a lower res monitor instead until shit gets there as opposed to technology that tries to simulate those actual graphic settings by limiting it to "only places it's noticed."

*Yet... it will. I'd rather they just focus on upping their core counts and shrinking their process nodes instead of finding fake ways to hold us over. Until they get there, I'm happy to just wait and not play at 4K. I don't do interim/holdover technology. Example: I'll only buy an electric car when it's as good as a gas car (meaning when I can drive it to E without needing to plan ahead and can charge it in 5 minutes). I'd never consider a hybrid vehicle.

Last edited by BeepBoo; 2021-06-09 at 04:59 PM.


MMO-Champion
