-

2020-11-01, 10:40 AM #701 Stood in the Fire

- Join Date: May 2010
- Posts: 415

-

2020-11-01, 10:59 AM #702 I am Murloc!

- Join Date: May 2008
- Posts: 5,650

1) Start is the most important

2) AMD has issues with almost every single release of the driver

3) A lot of bugs aren't fixed for ages

4) GPU acceleration/AMD specific graphics features support has always been shit

- - - Updated - - -

Yea, AMD has had amazing hardware for years, but software has always held them back. And that has nothing to do with fanboyism; a lot of people are simply not willing to deal with AMD GPUs, even though price/performance is usually better.

- - - Updated - - -

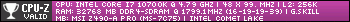

Let's not get too fanboyish here. FreeSync is literally VESA Adaptive-Sync that AMD slapped their branding on with zero difference. Nvidia has just as much right to slap their G-Sync Compatible sticker on it.

R5 5600X | Thermalright Silver Arrow IB-E Extreme | MSI MAG B550 Tomahawk | 16GB Crucial Ballistix DDR4-3600/CL16 | MSI GTX 1070 Gaming X | Corsair RM650x | Cooler Master HAF X | Logitech G400s | DREVO Excalibur 84 | Kingston HyperX Cloud II | BenQ XL2411T + LG 24MK430H-B

-

2020-11-01, 11:35 AM #703

-

2020-11-01, 11:39 AM #704 Stood in the Fire

- Join Date: May 2010
- Posts: 415

-

2020-11-01, 11:42 AM #705

Got to admit it's been a really long time since ATI was a thing, but from what I remember, back then they were miles ahead of Nvidia at times with better drivers and they still got outsold. AMD has had some bad drivers, but not really that frequently. I'd say about as frequently as Nvidia, but Nvidia has the money to fix things instantly, while from Hawaii forward AMD just had no money to put into anything.

The Radeon 5000 launch was a bit iffy, but it wasn't really the drivers; a hardware bug caused almost everything. Granted, that is a bigger oof by far.

All things considered, I am quite hopeful that they've gotten things sorted out. Maybe we have to wait for a while (after all, they were still hiring for important roles in the driver department not too long ago) to have everything running in good order. Guess we just have to wait and see how it goes. It's never a good idea to buy a GPU (or any tech) at launch anyways.

MMO-Champion Rules and Guidelines

-

2020-11-01, 11:49 AM #706

I really want them to come out with a 3080Ti/Super with 12GB GDDR6X

maybe even on TSMC 7+, if possible

that would be my pick most likely

-

2020-11-01, 12:32 PM #707 Keyboard Turner

- Join Date: Nov 2020
- Posts: 1

Good educated guess you've made right there, shows you know the stuff!

And I would like a 6900 XTX with GDDR6X instead of plain GDDR6, as well as a wider data bus, and also on TSMC 5nm /s

This is not how things work, dude. Chip design takes a lot of time and effort, and it is node specific. It is not as simple as printing; you can't just go to a different fab and ask them to print you new chips. It would take some work AND a lot of money, since masks are not free or cheap. So yeah, they COULD do that, but it would not be even remotely feasible from a market perspective. They gotta stick to what they've got: maybe cut chips differently, maybe adjust pricing, but I don't see them porting it to a different fab. Not unless they find themselves in deep, deep trouble. They might do a "refresh" down the line, though.

-

2020-11-01, 02:30 PM #708

From the rumors, they are doing a slightly cut-down 3090 with 12 gigs of memory and calling it a 3080 Ti, and a slightly cut-down 3080 as a 3070 Ti. Hopefully they push the pricing down based on rasterization performance rather than trying to charge a premium for better DXR. And of course AMD counters with price drops. And everyone rejoices for the competition. Except the ones who got a 3090, 3080 or a 3070.


-

2020-11-01, 03:28 PM #709

I'm just hopeful AMD really beats 3080 in 3rd party benches, because it will make my upgrade - 3080Ti - come faster.

-

2020-11-01, 04:18 PM #710 I am Murloc!

- Join Date: May 2008
- Posts: 5,650

A cut-down 3090 chip (GA102-300) is the 3080 chip (GA102-200). The difference between them is so small that I don't think there's space to make something in between. Making a GPU on the 3090 chip but with 12GB of VRAM is going to kill off the 3090, which Nvidia won't let happen.


-

2020-11-01, 05:21 PM #711

-

2020-11-01, 05:27 PM #712 The Patient

- Join Date: Aug 2019
- Posts: 227

-

2020-11-01, 07:18 PM #713

the difference between 3090 and 3080 is ~15%

enough room for a Ti with 12GB and 384-bit bus

-

2020-11-01, 08:06 PM #714 I am Murloc!

- Join Date: May 2008
- Posts: 5,650


-

2020-11-01, 08:23 PM #715

Yeah, no, it isn't. It uses GeForce drivers and is a GeForce card. Titans use professional drivers specific to them.

Well, yeah, that's the rumor: that they are preparing to release a 9984 CUDA core GA102 with 12 gigs of memory. That is a barely cut-down 3090, and really they should release it. The 6900 XT is so close and is $500 cheaper, so matching AMD at a slightly lower price would kill the 6900 XT, unless AIBs find a way to clock the 6900 XT higher with a lot more power. After all, there is a rumor of an Asus 6800 XT hitting boost clocks above 2.5GHz.

3080Ti with 384-bit bus and 12GB VRAM is literally 3090 with 12GB VRAM, so it's going to perform the same in gaming.

3090 will still remain for those that truly need the extra VRAM.

-

2020-11-01, 09:33 PM #716

Exactly right. The performance difference between the two is so slight that there's really no room for a 3080 Ti. I have the EVGA 3080 FTW Ultra, and OC'd it reaches almost what a stock 3090 can do. VRAM obviously hasn't been a limiting factor yet, otherwise a 3090 with 24GB of GDDR6X would benchmark significantly higher than a 3080. I never understood why people thought Nvidia would bring out the 3080 Ti with 20GB of VRAM. It would literally produce the same results as the current 3080, yet cost a lot more and bog down Nvidia's already limited production. It would make no sense.

AMD looks to have a strong lineup this time, which is awesome. Competition is great, and the end result is better options for everyone and often more reasonable prices. I'm reserving judgement until benchmarks though, as just because something looks great on paper doesn't mean it necessarily translates directly to real world results.

In the end, I don't care which is better. It's just cool we're at a point where there might actually be options.

-

2020-11-02, 12:27 AM #717

Yeah, I had the same thought about my second screen, but I've decided to sell it to a friend for a small price. That way he gets a decent screen for his 3070, and I get some cash towards the new GPU.

- - - Updated - - -

Yes, they did cancel their double-memory versions of the 3070/3080 due to supply line issues:

https://www.techpowerup.com/273637/n...070-16-gb?cp=2

So that's probably the route they will take. I would much rather have had them drop prices a bit; they owe us for punishing gamers during the false crypto boom.

-

2020-11-02, 05:33 AM #718 I am Murloc!

- Join Date: May 2008
- Posts: 5,650

No, they don't. They use the same kind of driver other GeForce cards use.

Yea, rumors are stupid sometimes.

- - - Updated - - -

I don't think prices are that unreasonable this time, bar the 3090, which is supposed to be prohibitively expensive. AMD is not an option for me and a lot of people because of drivers and no CUDA (for lack of an alternative).

-

2020-11-02, 06:28 PM #719 Titan

- Join Date: Oct 2010
- Location: America's Hat
- Posts: 14,143

-

2020-11-02, 06:53 PM #720
